What is a pixel - photo tips

- History of pixel photo development

- CRT innovation

- Definition of pixels

- How do pixels work

- Liquid crystals

- What is resolution in photography?

- What does megapixel mean?

- How to tell how many pixels an image has?

- JPEG or PNG better quality interactions

The word pixel is short for "picture element". Its story begins all the way back in 1839, when the first practical, commercially available photographic process was introduced. It was called the daguerreotype, a process that created images using a series of chemicals. Photography continued to improve from there, and the daguerreotype soon became obsolete.

History of pixel photo development

Photography was pretty much black and white until the first permanent color photograph was taken in 1861 by a man named James Clerk Maxwell.

What he did was capture three black and white images, each through a different filter: red, green, and blue. By projecting each of these images back through its respective colored filter and onto a screen, the full-color image could be reconstructed. This process of capturing just the primary colors of red, green, and blue light works so well that we still use it to this day.

Because red, green, and blue are the additive primary colors, you can mix them together in different proportions to produce a very wide range of colors.
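To make additive mixing concrete, here is a minimal Python sketch. It assumes each channel is expressed on a 0 to 255 scale, as is common on displays, and the helper name `mix_additive` is purely illustrative.

```python
def mix_additive(*colors):
    """Add RGB colors channel by channel, clamping each channel at 255."""
    return tuple(min(255, sum(channel)) for channel in zip(*colors))


RED = (255, 0, 0)
GREEN = (0, 255, 0)
BLUE = (0, 0, 255)

yellow = mix_additive(RED, GREEN)        # red light plus green light
white = mix_additive(RED, GREEN, BLUE)   # all three primaries at full strength
orange = mix_additive(RED, (0, 128, 0))  # full red plus roughly half green

print(yellow)  # (255, 255, 0)
print(white)   # (255, 255, 255)
print(orange)  # (255, 128, 0)
```

Changing the proportions of the three inputs is all it takes to move from one color to another.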

Continuing along the timeline, in 1926 John Logie Baird demonstrated the first televised moving images using a mechanical television set built around a rapidly rotating Nipkow scanning disk. It was grayscale and limited to 12.5 frames per second and just 30 lines of resolution, but it was very impressive for the time. At this point resolution was still measured in lines, not pixels, because pixels had not been invented yet, but we are getting closer to the meaning of a pixel.

CRT innovation

Later, in 1927, Philo T. Farnsworth demonstrated the completely electronic cathode ray tube (CRT) television set. The CRT was clearly superior to the mechanical TV sets. It worked by firing an electron beam at the screen, drawing the picture line by line so quickly that the naked eye cannot see the individual lines being drawn. In the 1950s color TV was introduced, and the difference was in having three electron guns inside, one for each of the primary colors. The beams would hit an array of colored phosphors called triads. But these triads were still not quite pixels. The color TV standard at the time used 525 horizontal lines.

Definition of pixels

It was not until the digital age that those video lines were further sliced into rectangles, which made the digital representation of an image possible. And thus the pixel was finally born.

Today there are different types of pixels that come in a variety of shapes and sizes on a variety of screens, such as plasma, OLED, and LCD displays, which have rendered CRTs mostly obsolete. Pixels have continued to get smaller, with better refresh rates and better color depth. Now that we know what a pixel is, let us see how it works!

How do pixels work

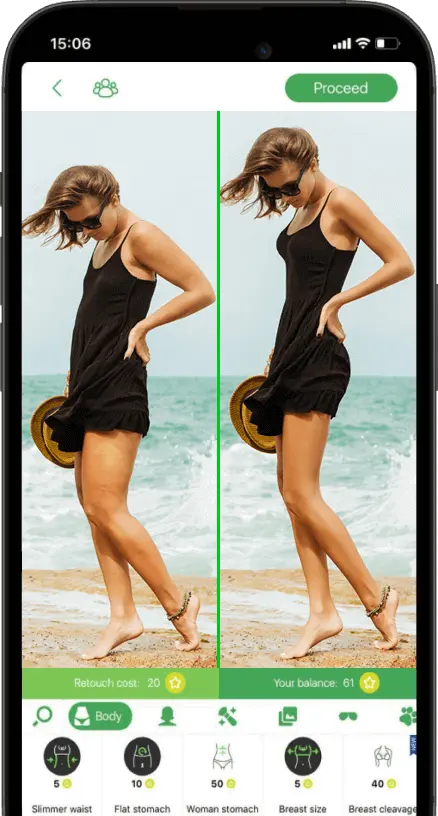

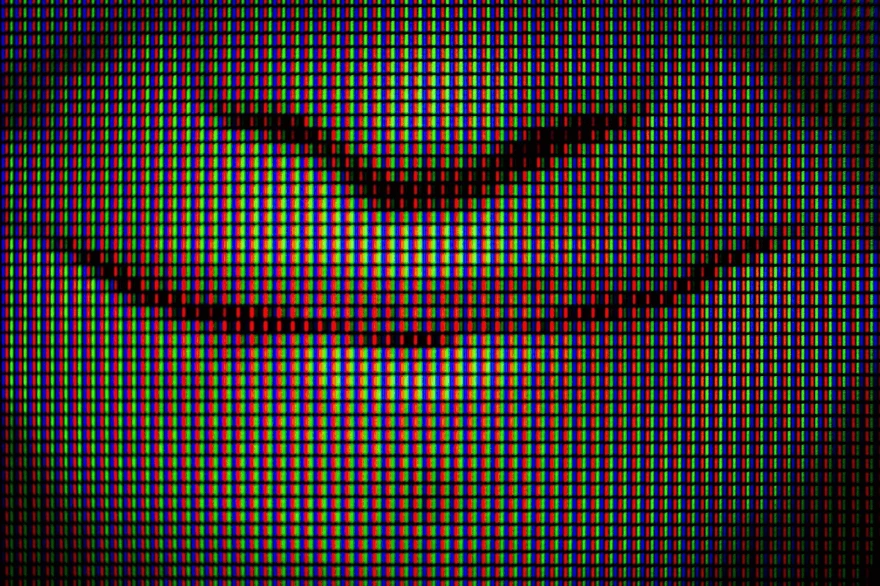

If you were to zoom in on an LCD monitor, you would see thousands of tiny red, green, and blue dots. Three of these grouped together make up one picture element, or pixel for short. When you take each pixel apart, you will see a backlight, three color filters, and a pair of polarizers.

As light leaves the backlight, it vibrates in many different planes, including the horizontal and the vertical planes. The first polarizer only lets through light vibrating in the horizontal plane. If a second polarizer that only passes light along the vertical axis is placed after it, all the horizontal light waves are blocked, so no light reaches the color filters. This is where liquid crystals come into play.

Liquid crystals

If we look closely at one of these liquid crystal cells, we see a transparent electrode at the front and the back, as well as etched glass at the front and the back. Liquid crystal molecules typically orient themselves in random directions, until the horizontally etched glass at the rear and the vertically etched glass at the front force them to twist in a predictable pattern. As light passes through the layer of liquid crystal, it naturally follows the twist of the molecules, so that any light entering along the horizontal plane exits along the vertical plane. By reintroducing the electrodes and passing electricity through them, we can make the molecules align themselves with the direction of the electric field, so that light no longer twists when passing through the liquid crystal.

When we place these crystals back into the pixel, we can see that the light from the backlight will pass through the horizontal polarizer, get twisted to a vertical orientation by the liquid crystals, and then flow through the vertical polarizer to the color filters. However, if we turn on the electrodes, the light is no longer twisted by the liquid crystals and stops at the vertical polarizer. By adjusting the amount of electricity that flows through each set of electrodes, we can control how much light reaches each color filter and what color is seen on the display.
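This dimming can be sketched with Malus's law, which says an ideal polarizer passes a fraction cos²θ of polarized light, where θ is the angle between the light's polarization and the polarizer's axis. The model below is idealized: it assumes the liquid crystal simply rotates the light by some angle between 0 and 90 degrees, whereas real panels respond to voltage in a more complicated way. The function name `transmitted_fraction` is hypothetical.

```python
import math


def transmitted_fraction(twist_degrees):
    """Fraction of horizontally polarized light that passes the vertical polarizer.

    Idealized model: the liquid crystal rotates the polarization by
    twist_degrees, and the vertical polarizer's axis sits at 90 degrees.
    """
    angle_to_polarizer = math.radians(90 - twist_degrees)
    return math.cos(angle_to_polarizer) ** 2


print(transmitted_fraction(90))  # electrodes off: full twist, nearly all light passes
print(transmitted_fraction(45))  # partial twist: about half the light passes
print(transmitted_fraction(0))   # electrodes fully on: no twist, light is blocked
```

Intermediate twist angles give intermediate brightness, which is how a single sub-pixel can show many shades rather than just on and off.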

We generally talk about colors on a display in terms of how bright the red, green, and blue are in each pixel, on a scale from 0 to 255. If all three numbers are zero, the pixel appears black. If all three are 255, it appears white.

As you vary the amounts of red, green, and blue in each pixel, you will see different colors appear on the screen. The monitor adjusts the amount of electricity flowing through each liquid crystal one by one, row by row, around 60 times per second on a typical display. That figure is your monitor's refresh rate.
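A couple of quick calculations follow from these numbers. The sketch below assumes three channels with values from 0 to 255 and a 60 Hz refresh rate; the constant and function names are illustrative only.

```python
LEVELS_PER_CHANNEL = 256  # values 0 through 255

BLACK = (0, 0, 0)
WHITE = (255, 255, 255)

# Every combination of red, green, and blue levels is a distinct color.
total_colors = LEVELS_PER_CHANNEL ** 3
print(total_colors)  # 16777216


def frame_time_ms(refresh_rate_hz):
    """Time available to redraw the whole screen once, in milliseconds."""
    return 1000 / refresh_rate_hz


print(round(frame_time_ms(60), 1))  # 16.7
```

So at 60 refreshes per second, the monitor has a little under 17 milliseconds to update every pixel on the screen.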

What is resolution in photography?

Resolution in photography is basically the number of pixels in your photograph. It can be given as a single number or as a pair of dimensions, such as 6000 by 4000 or 3840 by 2160. These numbers represent how many pixels run along the top of the image (width) and how many pixels run down the side of the image (height).
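One way to picture width and height is to treat an image as a grid of pixels. The sketch below builds a tiny, made-up 4 by 3 image as a list of rows; real image libraries use more efficient storage, so this is only an illustration.

```python
WIDTH, HEIGHT = 4, 3  # a tiny "4 by 3" image

# One list per row of the image, one (R, G, B) tuple per pixel in that row.
image = [[(0, 0, 0) for _ in range(WIDTH)] for _ in range(HEIGHT)]

# Paint the top-right pixel red: row 0, column WIDTH - 1.
image[0][WIDTH - 1] = (255, 0, 0)

print(len(image[0]), "by", len(image))  # 4 by 3
```

The width is the number of pixels in each row, and the height is the number of rows.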

What does megapixel mean?

If it is a single number, it is the total number of pixels in your image, found by multiplying the width by the height. For example, 3840 x 2160 = 8,294,400 pixels in total. That number is unwieldy, which is why we shorten it to roughly 8 megapixels. One megapixel is one million pixels.
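The arithmetic is simple enough to check in a few lines of Python, using the 3840 by 2160 and 6000 by 4000 examples. The function name `megapixels` is just an illustrative helper.

```python
def megapixels(width, height):
    """Total pixel count expressed in millions of pixels."""
    return width * height / 1_000_000


print(3840 * 2160)             # 8294400
print(megapixels(3840, 2160))  # 8.2944
print(megapixels(6000, 4000))  # 24.0
```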

How to tell how many pixels an image has?

If we right-click on an image and go to image properties → details, we can see how many pixels it has. Different apps may show this differently, for example as 6000x4000 (24.0 MP) - 240 PPI. PPI stands for pixels per inch and describes how densely the pixels are printed on paper, so it determines the printing resolution. For instance, 300 PPI is a common value for a normal photo-size print of high quality. We can increase the resolution of a printed image by increasing the PPI, and the higher the resolution, the better the image quality. But that is not the only parameter that affects image quality.
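Pixel dimensions and PPI together determine how large a print comes out. The sketch below works through the 6000x4000 example at 240 and 300 PPI; the helper name `print_size_inches` is an assumption made for illustration.

```python
def print_size_inches(width_px, height_px, ppi):
    """Printed width and height in inches for a given pixel density."""
    return width_px / ppi, height_px / ppi


w, h = print_size_inches(6000, 4000, 240)
print(w, round(h, 2))  # 25.0 16.67

w, h = print_size_inches(6000, 4000, 300)
print(w, round(h, 2))  # 20.0 13.33
```

The same pixels packed more densely give a smaller but sharper print.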

JPEG or PNG better quality interactions

As we said before, resolution and PPI are measures of quality, but they are not the only parameters that affect it. The file format matters too. For instance, if an image has high contrast and few colors, PNG will keep lines looking sharp and can reduce the file's size on disk. On the other hand, JPEG is the standard for images containing a lot of color gradients, such as photographs. Learn more about file formats and their differences in this article.
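PNG stores pixel data with lossless DEFLATE compression, the same algorithm exposed by Python's standard `zlib` module. The rough sketch below is not a real PNG or JPEG encoder. It only compresses raw RGB bytes to illustrate why an image with few colors shrinks so well under lossless compression, while a noisy image with many colors barely shrinks at all. Both test images are made up for illustration.

```python
import random
import zlib

WIDTH, HEIGHT = 64, 64

# Image A: high contrast, two colors only (black left half, white right half).
flat = bytearray()
for _ in range(HEIGHT):
    for x in range(WIDTH):
        flat += b"\x00\x00\x00" if x < WIDTH // 2 else b"\xff\xff\xff"

# Image B: every channel of every pixel is random (seeded so runs are repeatable).
rng = random.Random(0)
noisy = bytes(rng.randrange(256) for _ in range(WIDTH * HEIGHT * 3))

flat_size = len(zlib.compress(bytes(flat)))
noisy_size = len(zlib.compress(noisy))

# Raw size in bytes, then the compressed size of each image.
print(len(flat), flat_size, noisy_size)
```

The two-color image compresses to a small fraction of its raw size, while the noisy one stays about as large as it started.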

He started his career as a professional photo designer and retoucher and is a professional commercial photographer with 20 years of experience. He is a leading advertising photographer and has worked as a food photographer with Michelin-starred chefs. His work with models can be seen on the calendars of many leading companies in Ukraine. He was the owner of the photo studio and photo school "Happy Duck".

with RetouchMe