How to generate images with ControlNet

- Introduction to ControlNet AI

- Understanding ControlNet Architecture and Features

- Multi-Model Integration

- Real-Time Adjustment Layers

- Feedback Loops

- Advanced Data Handling

- Computational Efficiency

- Generating Images with ControlNet

- How to Install ControlNet

- Download models

- UI and Tools Overview

- Conclusion

The landscape of AI image generators has expanded to the point where they are now an undeniable tool in many forms of art, easing artists' workflows. In our previous article on Stable Diffusion, we explained how AI image generators based on diffusion models work. The technology has since developed further with ControlNet, a neural network that works alongside Stable Diffusion models and gives you fine-grained control over the images they generate. In this guide, we explore what ControlNet is, look at its architecture and how it works, and explain how to install the UI so you can use this powerful tool to generate images on your own.

Introduction to ControlNet AI

ControlNet is a neural network architecture that adds conditional control to text-to-image diffusion models such as Stable Diffusion. A text prompt alone leaves composition, pose, and layout largely to chance. ControlNet lets you supply an additional reference image, processed into a control map (for example, edges, depth, or a human pose), that guides the structure of the generated image. This makes it particularly valuable in fields requiring detailed customization, such as digital art, simulation, and augmented reality.

A separate ControlNet model is trained for each type of control map, and several of them can be applied to the same Stable Diffusion model at once. This lets you fix specific properties of the output, such as the outline of an object, the depth of a scene, or a character's pose, while the prompt still determines style, color palette, and texture. Settings such as the control weight let you decide how strictly the output follows each control map.

This control comes from training an extra branch next to the base model while the original Stable Diffusion weights stay frozen, rather than retraining Stable Diffusion itself. As a result, ControlNet models can be added to existing Stable Diffusion workflows without replacing the base model, which makes ControlNet a powerful tool for creators across various industries.

Understanding ControlNet Architecture and Features

ControlNet's architecture serves two goals: keeping the image quality of a pretrained Stable Diffusion model and adding precise structural control on top of it. It achieves this by locking (freezing) the weights of the original model and training a copy of its encoder blocks on the conditioning input, such as an edge map, depth map, or pose skeleton. The copy's output is fed back into the locked model through ‘zero convolutions’, 1×1 convolution layers whose weights start at zero, so at the beginning of training the control branch adds nothing and the original model's behavior is preserved.
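The wiring described in the original ControlNet paper can be illustrated with a toy example. For each controlled block, the output is y = F(x) + Z2(Fc(x + Z1(c))), where F is the frozen pretrained block, Fc its trainable copy, c the conditioning input, and Z1, Z2 are zero-initialized 1×1 convolutions. The sketch below replaces every block with a scalar linear function, an assumption made purely for readability; real blocks are convolutional U-Net layers operating on feature maps.

```python
# Toy, scalar illustration of the ControlNet wiring (not the real network).
# Assumption: each "block" is a linear function f(x) = w * x + b standing in
# for a U-Net encoder block; real blocks are convolutional neural networks.
from dataclasses import dataclass


@dataclass
class Block:
    """Stand-in for a neural network block: y = w * x + b."""
    w: float
    b: float

    def __call__(self, x: float) -> float:
        return self.w * x + self.b


@dataclass
class ZeroConv:
    """Stand-in for a 1x1 convolution whose weight and bias start at zero."""
    w: float = 0.0
    b: float = 0.0

    def __call__(self, x: float) -> float:
        return self.w * x + self.b


def controlnet_block(x: float, c: float, locked: Block, trainable: Block,
                     zin: ZeroConv, zout: ZeroConv) -> float:
    """Frozen block output plus the zero-conv-gated output of the trainable copy."""
    return locked(x) + zout(trainable(x + zin(c)))


locked = Block(w=2.0, b=1.0)       # frozen, pretrained weights
trainable = Block(w=2.0, b=1.0)    # copy initialized from the frozen block
# At initialization both zero convolutions output 0, so the control input c
# has no effect yet and the result equals locked(3.0).
print(controlnet_block(3.0, c=5.0, locked=locked, trainable=trainable,
                       zin=ZeroConv(), zout=ZeroConv()))  # 7.0
```

Because both zero convolutions output 0 at initialization, the combined block first reproduces the frozen model exactly; training then moves their weights away from zero so the control input gradually takes effect.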

Multi-Model Integration

ControlNet can apply several control models to a single Stable Diffusion model at the same time (often called Multi-ControlNet). Each ControlNet model is trained for one kind of control map, for example Canny edges, depth, or OpenPose skeletons, and each contributes its own guidance signal, scaled by its weight, to the same generation. By combining them, for instance a pose skeleton for a character's posture and a depth map for the scene layout, ControlNet can produce more complex and tightly constrained images than a single control map allows.
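A minimal sketch of how several control units can be combined, assuming (as in common Multi-ControlNet implementations) that each unit produces residual features, scales them by its control weight, and adds them to the same base features. Plain lists of floats stand in for feature maps, and the class and function names are hypothetical.

```python
# Toy sketch of Multi-ControlNet: each unit contributes a residual (a short
# list of floats standing in for feature maps), scaled by its weight, and the
# scaled residuals are summed onto the base model's features.
from dataclasses import dataclass


@dataclass
class ControlUnit:
    name: str                # label only, e.g. "canny", "depth", "openpose"
    residual: list[float]    # stand-in for the features this unit produces
    weight: float = 1.0      # control weight set in the UI


def combine(base: list[float], units: list[ControlUnit]) -> list[float]:
    """Add every unit's weighted residual to a copy of the base features."""
    out = list(base)
    for unit in units:
        if len(unit.residual) != len(out):
            raise ValueError(f"{unit.name}: residual shape mismatch")
        for i, r in enumerate(unit.residual):
            out[i] += unit.weight * r
    return out


units = [
    ControlUnit("canny", [1.0, 0.0, 2.0], weight=1.0),
    ControlUnit("depth", [0.5, 0.5, 0.5], weight=0.5),
]
print(combine([0.0, 1.0, 0.0], units))  # [1.25, 1.25, 2.25]
```

Lowering a unit's weight shrinks only its own contribution, which is why each unit can be tuned independently.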

Real-Time Adjustment Layers

Rather than running separate real-time layers, ControlNet exposes a few settings that adjust its influence on each generation. The control weight scales the guidance signal that ControlNet adds to Stable Diffusion, while the starting and ending control steps limit the part of the denoising process in which that guidance is applied. For example, applying control only during the early steps tends to fix the overall composition while leaving finer details to the prompt.
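In the WebUI extension, the most concrete levers for this kind of adjustment are the control weight and the starting and ending control steps. The hypothetical function below sketches how they might combine into a per-step multiplier, assuming a unit runs at its full weight only while the fraction of completed denoising steps lies in [start, end); the extension's exact rounding may differ.

```python
# Hypothetical sketch: how a control weight and a start/end window might decide
# how strongly a ControlNet unit guides each denoising step. The function name
# and the [start, end) rule are assumptions, not the extension's actual code.

def control_scale(step: int, total_steps: int, weight: float,
                  start: float = 0.0, end: float = 1.0) -> float:
    """Return the multiplier applied to the unit's guidance at a given step."""
    if not 0.0 <= start <= end <= 1.0:
        raise ValueError("expected 0 <= start <= end <= 1")
    progress = step / total_steps          # fraction of denoising completed
    return weight if start <= progress < end else 0.0


# Full-strength control during the first half of 10 steps only.
schedule = [control_scale(s, 10, weight=1.0, start=0.0, end=0.5) for s in range(10)]
print(schedule)  # [1.0, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

With the default window of 0 to 1, the unit is active at every step and only the weight changes its strength.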

Feedback Loops

Refinement with ControlNet is iterative and driven by you. After each generation, you can inspect the control map and the output, then adjust the preprocessor, control weight, control mode, or prompt and generate again until the image matches what you have in mind.

Advanced Data Handling

Before an image can guide generation, a preprocessor (also called an annotator) converts it into a control map: an edge map for Canny, an estimated depth map for Depth, a stick-figure skeleton for OpenPose, and so on. The ControlNet model reads this map rather than the original photo, which is why the choice of preprocessor determines which properties of the reference image carry over into the result.
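To show what a preprocessor does, here is a toy edge detector that turns a grayscale image into a binary control map. It is a simple gradient-threshold filter, not the Canny algorithm that the ‘Canny’ preprocessor uses, and the function name and threshold value are illustrative assumptions.

```python
# Toy preprocessor: turns a grayscale image (a 2D list of 0-255 values) into a
# binary edge map. This is a simple gradient-threshold detector, NOT the full
# Canny algorithm (no smoothing, non-maximum suppression or hysteresis).

def edge_map(gray: list[list[int]], threshold: int = 50) -> list[list[int]]:
    """Return a map with 255 where brightness jumps sharply, else 0."""
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Forward differences with the right and lower neighbours.
            dx = abs(gray[y][x + 1] - gray[y][x]) if x + 1 < w else 0
            dy = abs(gray[y + 1][x] - gray[y][x]) if y + 1 < h else 0
            if max(dx, dy) > threshold:
                out[y][x] = 255
    return out


# A dark square on a light background: edges appear around its border.
img = [
    [200, 200, 200, 200],
    [200,  20,  20, 200],
    [200,  20,  20, 200],
    [200, 200, 200, 200],
]
for row in edge_map(img):
    print(row)
```

The map marks pixels next to sharp brightness changes; a real Canny preprocessor additionally smooths the image and thins and links the edges into clean lines.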

Computational Efficiency

Because the base Stable Diffusion weights stay locked and only the copied encoder blocks are trained, a ControlNet model can be trained for a new type of control map without retraining the whole diffusion model. At generation time, however, each active ControlNet unit adds computation and memory use on top of Stable Diffusion, which is why GPU acceleration matters and why the extension offers a Low VRAM option for cards with limited memory.

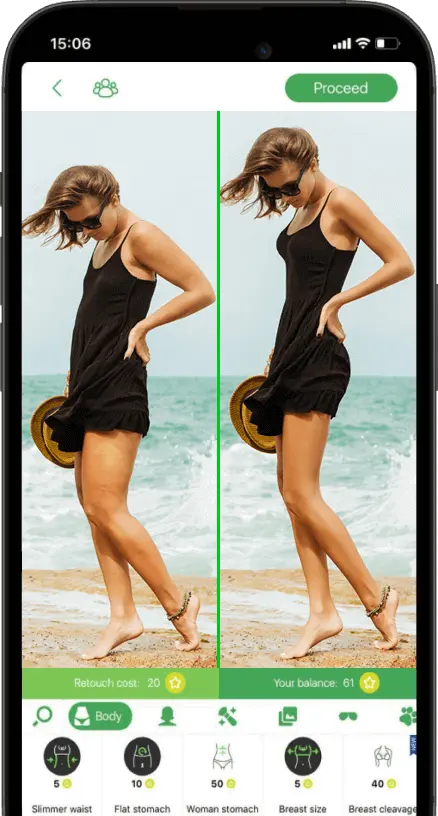

Generating Images with ControlNet

So how do you use ControlNet? To start generating images with ControlNet, you need the Stable Diffusion WebUI by AUTOMATIC1111, a browser-based user interface for Stable Diffusion (a text-to-image model developed by Stability AI), with the ControlNet extension added to it. Both are hosted on GitHub, so a quick search will lead you to their repositories.

How to Install ControlNet

Once the WebUI is running, open the ‘Extensions’ tab, go to the ‘Install from URL’ tab, and paste the URL of the ControlNet extension's Git repository (https://github.com/Mikubill/sd-webui-controlnet). Then press the ‘Install’ button. When installation finishes, a message confirms that the extension has been installed into the WebUI. Finally, go to the ‘Installed’ tab, check for updates, and restart the UI.

Download models

You will also need to download the ControlNet models themselves, which are available on Hugging Face. Once downloaded, place them in the extension's models folder (extensions/sd-webui-controlnet/models inside the WebUI directory); you only need the files ending in .pth. The extension automatically downloads any preprocessor you are missing the first time you run it.
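If you prefer to move the files with a script, the sketch below copies only the .pth files from a download folder into the models folder. The folder names are assumptions: ‘downloads’ is a placeholder, and the target assumes a default install in which the extension lives at stable-diffusion-webui/extensions/sd-webui-controlnet; adjust both to your setup.

```python
# Sketch: copy only the .pth model files from a download folder into the
# extension's models folder. Both paths below are assumptions; adjust them
# to where you downloaded the models and where your WebUI is installed.
import shutil
from pathlib import Path


def install_models(download_dir: Path, models_dir: Path) -> list[str]:
    """Copy *.pth files from download_dir to models_dir; return copied names."""
    models_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for f in sorted(download_dir.iterdir()):
        if f.is_file() and f.suffix.lower() == ".pth":
            shutil.copy2(f, models_dir / f.name)
            copied.append(f.name)
    return copied


if __name__ == "__main__":
    downloads = Path("downloads")  # assumed download folder
    target = Path("stable-diffusion-webui/extensions/sd-webui-controlnet/models")
    if downloads.is_dir():
        print(install_models(downloads, target))
```

Files with other extensions, such as .yaml configs or README files downloaded alongside the models, are skipped.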

Once all of this is done, ControlNet is installed and the models you downloaded appear in the corresponding drop-down list.

UI and Tools Overview

When you open the ControlNet UI, you will see many functions for generating images. First, check the ‘Enable’ box to activate the extension. Then place your reference image in the ‘Single Image’ tab by dragging and dropping it into the box. The ‘Single Image’ box offers tools such as masking, brush, undo, eraser, and a delete button. These are the other features most worth explaining:

- Control Type: Below the image box is a set of options called ‘Control Type’. Selecting one tells ControlNet which kind of information to extract from the reference image to guide the new one. This is how you create control maps from reference images.

- Multi-unit processing: ControlNet has multiple units, which let you combine several control maps and settings to influence a single generated image. This gives you even more control over the creation process. For example, you can use ‘Canny’, ‘Depth’, and ‘OpenPose’ at the same time instead of just one control type.

- Batch-Processing: Batch lets you generate multiple images at a time by specifying a folder containing the reference images.

- Low VRAM: Enable this option if your GPU has less than 6 GB of VRAM. It reduces ControlNet's memory usage so that generation can run on cards with limited memory.

- Pixel Perfect: This option makes the control maps generated from your reference images more accurate by letting ControlNet set the preprocessor resolution automatically. With it enabled, you don't need to set a preprocessor resolution yourself.

- Allow Preview: This option lets you preview the control map produced from your reference image, so you can see what will be applied before committing to a full image generation.

- Control Mode: This option decides whether your prompt or ControlNet takes priority, with three choices: ‘Balanced’, ‘My prompt is more important’, and ‘ControlNet is more important’. Experiment with the setting to see how the results differ.

- Resize Mode: This determines how the input image and control map are resized relative to the resolution you set for the generated image. ‘Just Resize’ changes the size without cropping or filling, usually by stretching or squeezing the image to match the target size. ‘Crop and Resize’ scales the input image so that it covers the target resolution and crops off whatever extends beyond it. ‘Resize and Fill’ scales the input image to fit inside the target resolution and fills the remaining space to make up the full size.

- Presets: Presets let you save and load your ControlNet settings, making it easy to recreate your favorite configurations. Set up ControlNet the way you like, press the ‘Save’ icon, give the preset a name, and save it. You may need to click the blue refresh icon for the preset to appear, and you can delete a preset with the bin icon.
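The difference between the three resize modes is easiest to see as arithmetic on image sizes. The hypothetical helper below assumes ‘Just Resize’ stretches to the target size, ‘Crop and Resize’ scales the input until it covers the target and crops the overflow, and ‘Resize and Fill’ scales it until it fits and pads the remainder; it computes sizes only and is not the extension's own code.

```python
# Hypothetical sketch of the geometry behind the three resize modes.
# Mode keys and the function name are illustrative assumptions.

def resize_plan(src_w: int, src_h: int, dst_w: int, dst_h: int, mode: str) -> dict:
    """Return the scaled size plus the pixels cropped or padded on each axis."""
    if mode == "just_resize":
        # Stretch each axis independently; the aspect ratio may change.
        return {"scaled": (dst_w, dst_h), "crop": (0, 0), "pad": (0, 0)}
    if mode == "crop_and_resize":
        k = max(dst_w / src_w, dst_h / src_h)   # scale to cover the target
        w, h = round(src_w * k), round(src_h * k)
        return {"scaled": (w, h), "crop": (w - dst_w, h - dst_h), "pad": (0, 0)}
    if mode == "resize_and_fill":
        k = min(dst_w / src_w, dst_h / src_h)   # scale to fit inside the target
        w, h = round(src_w * k), round(src_h * k)
        return {"scaled": (w, h), "crop": (0, 0), "pad": (dst_w - w, dst_h - h)}
    raise ValueError(f"unknown mode: {mode}")


# A 1024x768 reference used for a 512x512 generation:
for m in ("just_resize", "crop_and_resize", "resize_and_fill"):
    print(m, resize_plan(1024, 768, 512, 512, m))
```

For this example, ‘Crop and Resize’ trims about 171 pixels of width, while ‘Resize and Fill’ leaves 128 pixels of height to fill.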

Conclusion

As AI image generators evolve, you can use them for playful projects, such as taking the features of an anime character and applying them to your own photo or selfie. The options are nearly limitless, and with further research AI may advance to the point where we can no longer tell human and AI art apart. For now, ControlNet combined with Stable Diffusion models is a convenient tool that artists can use in their everyday workflow, saving time and effort while still adding their own touches to the main project through its comprehensive settings.

Co-founder of RetouchMe. In addition to business, he is passionate about travel photography and videography. His photos can be viewed on Instagram (over 1 million followers), and his films can be found on his YouTube channel.

His profile also appears on IMDb, the film industry's most popular and authoritative resource. He has received 51 international awards and 18 nominations at film festivals worldwide.
